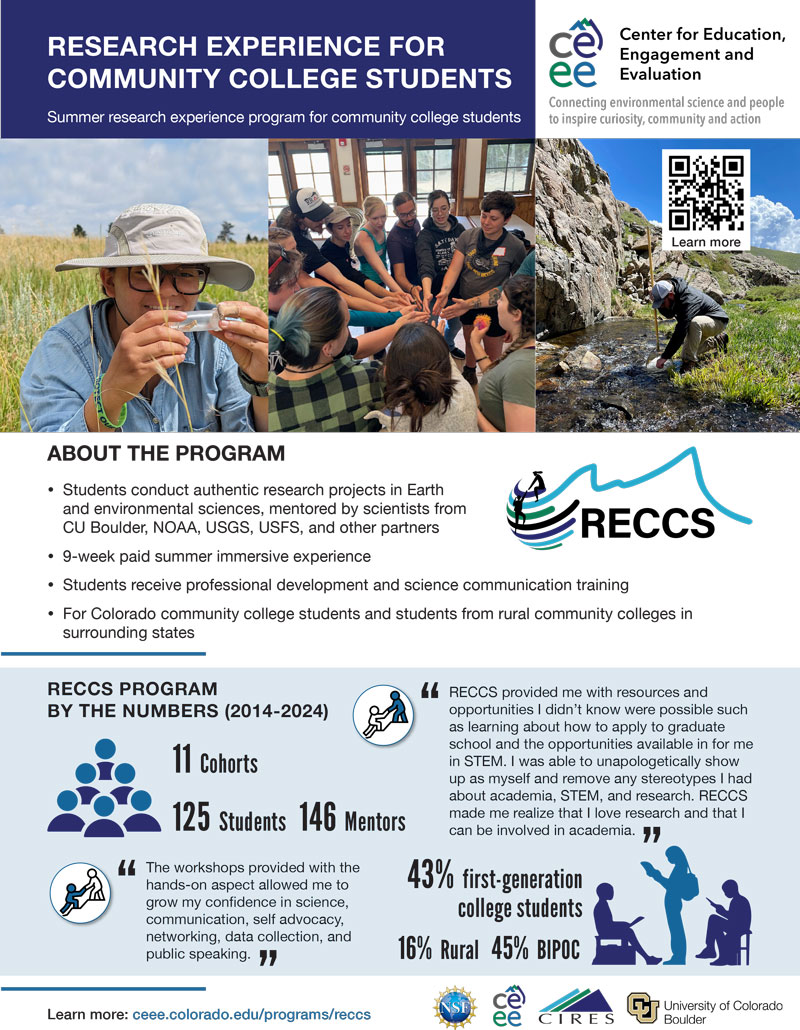

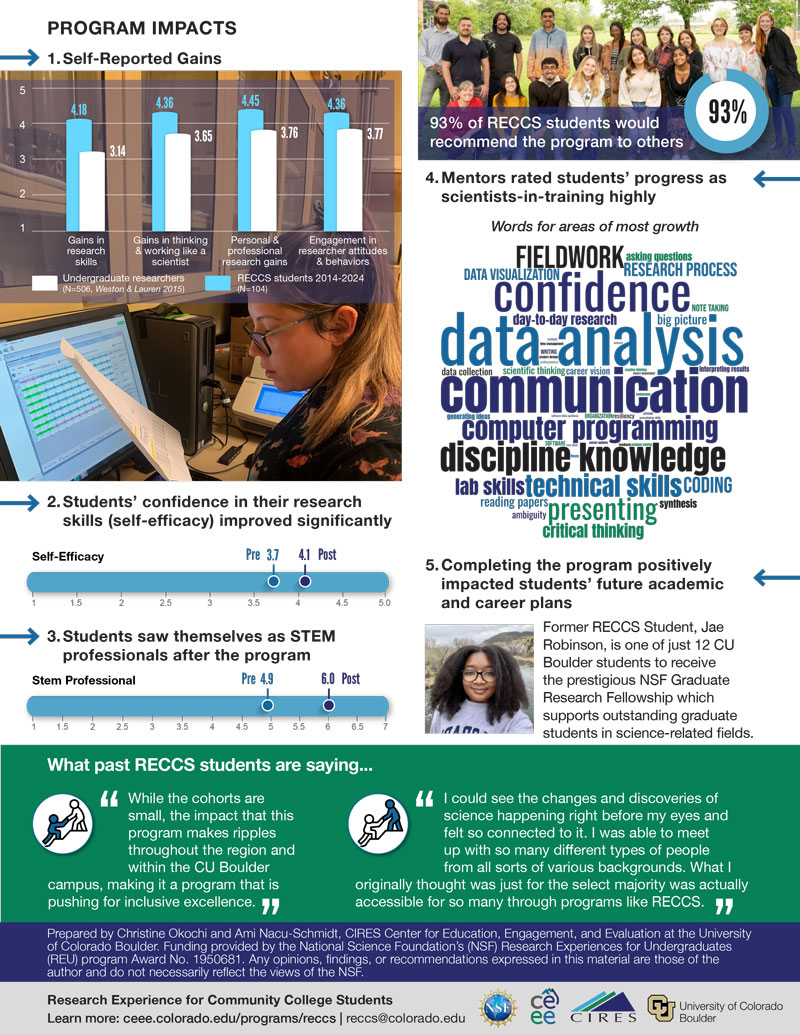

Our team currently evaluates several NSF-funded Research Experiences for Undergraduates (REU) programs and Course-based Undergraduate Research Experiences (CUREs). We work with program coordinators and instructors from the proposal stage through the final program impact report. Evaluation activities can include custom formative surveys for program improvement and refinement; validated quantitative measures that assess program outcomes such as science self-efficacy, science identity, and career plans; and qualitative measures, such as focus groups and interviews, for a deeper understanding of students’ experiences in a program or course.

See an example evaluation impact story from the Research Experience in Alpine Meteorology (REALM) program, a summer research experience for undergraduate students.

Our team evaluates curricula, teaching resources, and teacher trainings, as well as their impact on teachers and their students. We typically evaluate curricula throughout development and implementation. This work includes iterative formative evaluation during the development phase, evaluation of the professional development that prepares teachers to implement the curriculum, analysis of student data and teacher implementation data, and web analytics to assess the reach and use of curricula. We have also conducted needs assessments early in projects to gauge teacher demand and to help project teams design and deliver relevant, successful curricula to classrooms.

See an example evaluation report from the HEART Force program, an education program focused on building community resilience that includes a curriculum, a student community action project, scenario-based role-play games, teacher training, and a teacher community of practice.

Our team evaluates several informal, or outside-of-the-classroom, education projects, including planetarium films, library exhibits, community events, and film festivals. We work closely with project teams to conduct needs assessments and exhibit usability studies. When collecting audience feedback, we select tailored evaluation techniques, translate evaluation instruments into the audience's primary language, and use accessible language. We track visitor engagement through interactive touch-screen surveys, hands-on activities integrated into the exhibit, downloads of digital resources, web analytics, and participation and attendance numbers. We also interview project partners and participants and conduct listening sessions. These conversations help shape the program to meet audience and community needs and deepen our understanding of the project's impact on local learning ecosystems and on capacity building in communities.

See an example evaluation report from the We are Water (WaW) project, which includes an exhibition that is currently traveling to rural and Tribal libraries across the Southwest.

Our team provides formative and summative evaluation for data centers, community offices, and other large, multi-organization projects, using collaborative approaches with project teams and partners. CEEE works collaboratively with leadership teams on evaluation and offers guidance on developing a logic model and theory of change. We provide process evaluation, impact measures and feedback on events, and methods for tracking project deliverables and measures of success. We also conduct social network analysis to document how emerging networks evolve, and we provide ongoing evaluation to assess the extent of change resulting from these activities. We often contribute to reports as well as publications about data centers and community offices.

See an example evaluation report from the NSF-funded Environmental Data Science Innovation and Inclusion Lab (ESIIL), a data synthesis center focused on advancing environmental data science and on leveraging the wealth of environmental data and innovative analyses to develop science-based solutions to pressing challenges in biology and other environmental sciences.

See an example evaluation report from the Navigating the New Arctic Community Office (NNA-CO). The office supports all NSF-funded NNA Arctic research projects by building awareness, partnerships, opportunities, and resources for collaboration and for equitable knowledge generation throughout research design and implementation. It also coordinates effective knowledge dissemination, education, and outreach.

Our team contributes to geoscience education research on topics including spatial reasoning, sense of place, environmental action and sense of agency in youth, climate and resilience education, geoscience career development, and systems thinking.

See all CEEE publications or read about a few of our education research publications below:

- Gold, A. U., Geraghty Ward, E. M., Marsh, C. L., Moon, T. A., Schoeneman, S. W., Khan, A. L., et al. (2023). Measuring novice-expert sense of place for a far-away place: Implications for geoscience instruction. PLoS ONE, 18(10), e0293003. https://doi.org/10.1371/journal.pone.0293003

- Littrell, M. K., Gold, A. U., Kosley, K. L. K., May, T. A., Leckey, E., & Okochi, C. (2022). Transformative experience in an informal science learning program about climate change. Journal of Research in Science Teaching, 1-25. https://doi.org/10.1002/tea.21750

- Gold, A. U., Pendergast, P., Ormand, C., Budd, D., & Mueller, K. (2018). Improving spatial thinking skills among undergraduate geology students through short online training exercises. International Journal of Science Education. https://doi.org/10.1080/09500693.2018.1525621